The problems of using a pre-trained LLM directly

- might provide incorrect information due to training on biased or inaccurate data

- may not perform well on highly specialized topics that require deep domain knowledge

- cannot verify the accuracy of information in real-time

- lacks up-to-date context and recent news, leading to irrelevant or inappropriate responses

Honestly, the above points alone are enough to prompt researchers to explore new technologies. Hence the rise of Retrieval-Augmented Generation (RAG), which aims to reduce hallucination in LLM inference.

Someone might ask: fine-tuning addresses a similar problem, so why is it so much less popular than RAG? Given RAG's convenience, lower cost, and support for dynamic knowledge updates, ordinary users would rather use RAG than fine-tune an LLM themselves.

What is RAG

Retrieval-Augmented Generation (RAG) is a technique that brings in external information sources, providing an effective mitigation strategy for these problems.

Why and how it works

Core idea

The core idea of RAG is to use a user-defined knowledge store, such as a vector database, to enrich the user's question with retrieved context in the prompt and so get better answers. It relies on the LLM's in-context learning to reduce hallucination, which makes it a gradient-free approach: no model weights are updated.

Workflows

Cited references: Retrieval-Augmented Generation for Large Language Models: A Survey

Naive RAG

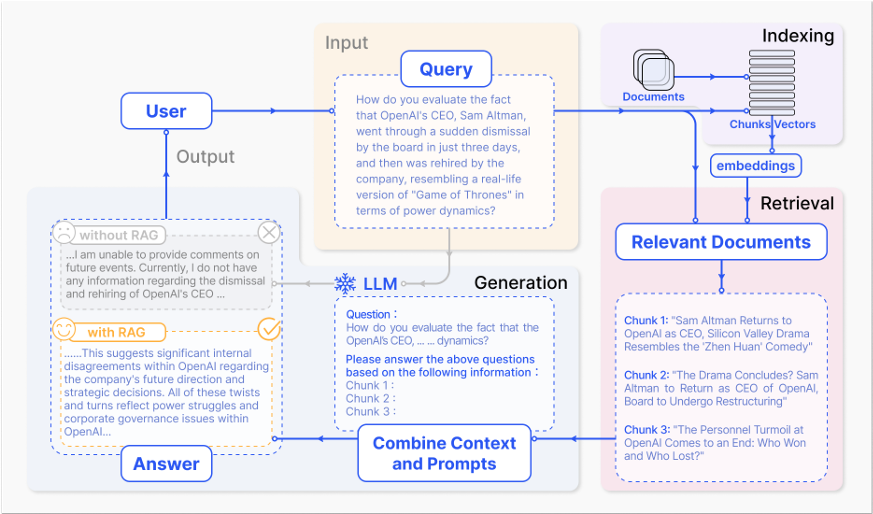

Let’s consider an example. A user poses a question related to recent news. Since the LLM relies on pre-trained data and cannot provide the latest information, RAG compensates for this gap by fetching and integrating knowledge from external databases. It collects news articles relevant to the user’s query and combines them with the original question to form a comprehensive prompt, enabling the large model to generate a rich and insightful answer.

The above RAG example consists of three parts: indexing, retrieval, and generation.

Indexing. Indexing starts with the cleaning and extraction of raw data in diverse formats like PDF, HTML, Word, and Markdown, which is then converted into a uniform plain text format. To accommodate the context limitations of language models, text is segmented into smaller, digestible chunks. Chunks are then encoded into vector representations using an embedding model and stored in a vector database. This step is crucial for enabling efficient similarity searches in the subsequent retrieval phase.
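The indexing step can be sketched in a few lines. This is only a toy, under loud assumptions: `chunk_text`, `toy_embed`, and `build_index` are hypothetical names, the "embedding model" is a hashed bag of words rather than a real model, and the "vector database" is a plain Python list.

```python
import hashlib
import math
import re


def chunk_text(text: str, max_words: int = 50, overlap: int = 10) -> list:
    """Split plain text into overlapping windows of at most max_words words."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def toy_embed(text: str, dim: int = 64) -> list:
    """Assumption: stand-in for a real embedding model (hashed bag of words)."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]  # L2-normalised


def build_index(documents: list) -> list:
    """Chunk every document and store each chunk together with its vector."""
    index = []
    for doc_id, doc in enumerate(documents):
        for chunk in chunk_text(doc):
            index.append({"doc_id": doc_id, "text": chunk, "vector": toy_embed(chunk)})
    return index
```

The overlap between neighbouring chunks is a common choice so that a sentence cut at a chunk boundary still appears whole in at least one chunk; the sizes used here are arbitrary.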

Retrieval. Upon receipt of a user query, the RAG system employs the same encoding model utilized during the indexing phase to transform the query into a vector representation. It then computes the similarity scores between the query vector and the vectors of the chunks within the indexed corpus. The system prioritizes and retrieves the top K chunks that demonstrate the greatest similarity to the query. These chunks are subsequently used as the expanded context in the prompt.
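A minimal sketch of top-K retrieval, assuming cosine similarity as the similarity score. The `encode` function here is a toy word-count encoder standing in for the real embedding model, and all function names are hypothetical; the point is only that the query and the chunks go through the same encoder before being compared.

```python
import math
import re
from collections import Counter


def encode(text: str) -> Counter:
    """Assumption: toy encoder (sparse word counts) in place of an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, chunks: list, k: int = 2) -> list:
    """Return the k (score, chunk) pairs most similar to the query."""
    query_vec = encode(query)  # same encoder as used for the chunks
    scored = [(cosine(query_vec, encode(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


chunks = [
    "The central bank raised interest rates today",
    "A new football season starts in August",
    "Interest rates affect mortgage costs",
]
top = retrieve("interest rates", chunks, k=2)
```

In a real system the chunk vectors would be computed once at indexing time and looked up in the vector database, instead of being re-encoded for every query as in this sketch.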

Generation. The posed query and selected documents are synthesized into a coherent prompt to which a large language model is tasked with formulating a response. The model’s approach to answering may vary depending on task-specific criteria, allowing it to either draw upon its inherent parametric knowledge or restrict its responses to the information contained within the provided documents. In cases of ongoing dialogues, any existing conversational history can be integrated into the prompt, enabling the model to engage in multi-turn dialogue interactions effectively.
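The prompt assembly described above might look roughly like this. The template wording, the `build_prompt` name, and the `restrict_to_context` flag are all assumptions made for illustration; the actual call to the LLM is left out on purpose.

```python
from typing import List, Optional, Tuple


def build_prompt(
    query: str,
    chunks: List[str],
    history: Optional[List[Tuple[str, str]]] = None,
    restrict_to_context: bool = True,
) -> str:
    """Combine the query, retrieved chunks and optional chat history into one prompt."""
    if restrict_to_context:
        # The model should answer only from the provided documents.
        instruction = "Answer using only the context below. If the context is not enough, say so."
    else:
        # The model may also draw on its own parametric knowledge.
        instruction = "Use the context below where it helps; you may also rely on your own knowledge."
    lines = [instruction, "", "Context:"]
    lines += [f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1)]
    if history:
        lines += ["", "Conversation so far:"]
        lines += [f"{role}: {text}" for role, text in history]
    lines += ["", f"Question: {query}", "Answer:"]
    return "\n".join(lines)


prompt = build_prompt(
    "Who won?",
    ["Team A won the final."],
    history=[("user", "Hi"), ("assistant", "Hello")],
)
```

The resulting string is what would be sent to the language model. Switching `restrict_to_context` changes only the instruction line, which is one simple way to express the two answering modes mentioned above.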

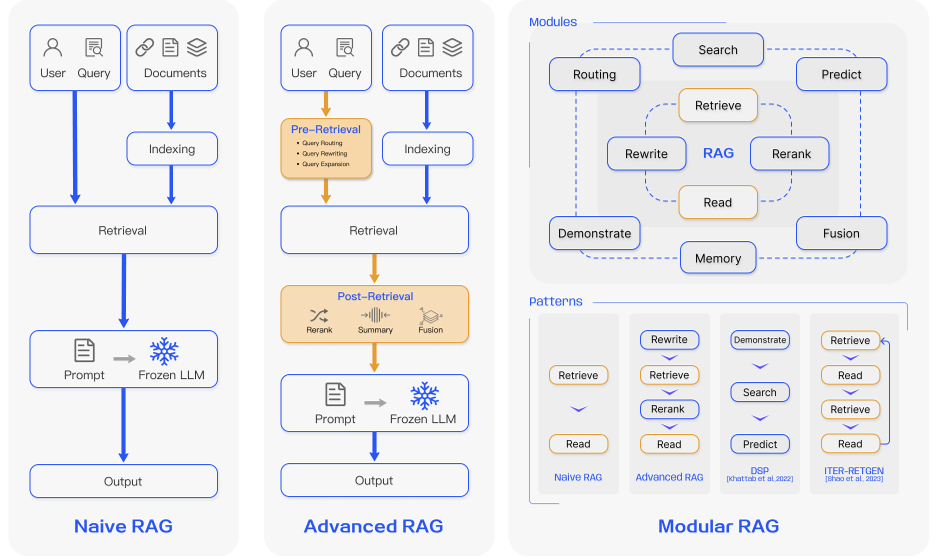

Researchers were not satisfied with this and set out to optimize how context for the prompt is retrieved, going beyond a plain top-k search. The accompanying image shows two optimizations of naive RAG.

Advanced RAG

Advanced RAG introduces two new stages, Pre-Retrieval and Post-Retrieval, to enhance retrieval quality:

Pre-Retrieval Process: Focuses on improving the index and queries. Index optimization aims to enhance the quality of indexed content through methods like increasing data granularity, optimizing index structures, adding metadata, alignment optimization, and mixed retrieval. Query optimization clarifies and tailors the user’s original question for better search, using techniques like query rewriting, transformation, and expansion.
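Two of the pre-retrieval ideas, query expansion and adding metadata, can be illustrated with a small sketch. Everything here is hypothetical: the synonym table is hand-written (a real system might ask an LLM to rewrite the query instead), and the chunk layout with a `metadata` dict is just one possible convention.

```python
import re


def expand_query(query: str, synonyms: dict) -> list:
    """Return the original query plus one variant per matching synonym."""
    variants = [query]
    for term, alternatives in synonyms.items():
        pattern = re.compile(rf"\b{re.escape(term)}\b", flags=re.IGNORECASE)
        if pattern.search(query):
            for alt in alternatives:
                # A function replacement avoids escape issues in the alternative text.
                variants.append(pattern.sub(lambda _match: alt, query))
    return variants


def filter_by_metadata(chunks: list, **required) -> list:
    """Keep only chunks whose metadata matches every required key/value pair."""
    return [
        chunk
        for chunk in chunks
        if all(chunk.get("metadata", {}).get(key) == value for key, value in required.items())
    ]


# Hypothetical synonym table and indexed chunks, for illustration only.
synonyms = {"LLM": ["large language model"], "hallucination": ["fabricated output"]}
queries = expand_query("LLM hallucination causes", synonyms)

indexed = [
    {"text": "Rates were raised.", "metadata": {"source": "news", "year": 2024}},
    {"text": "Rates explained.", "metadata": {"source": "wiki", "year": 2024}},
]
news_only = filter_by_metadata(indexed, source="news")
```

Each expanded query would then be sent through retrieval and the results merged, while the metadata filter narrows the candidate chunks before any similarity search is run.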

Post-Retrieval Process: Deals with effectively integrating retrieved context with the query. Directly inputting all relevant documents into the large model can lead to information overload. To mitigate this, methods introduced in this stage include chunk reranking and context compression.
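A rough sketch of reranking followed by context compression. The scoring here is plain word overlap with the query, which is an assumption standing in for a real reranking model, and the compression simply drops sentences that share no word with the query. The function names and the word budget are hypothetical.

```python
import re


def tokens(text: str) -> set:
    """Lowercased word set of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rerank(query: str, chunks: list) -> list:
    """Order chunks by the share of query words they contain (toy relevance score)."""
    q = tokens(query)
    return sorted(chunks, key=lambda c: len(q & tokens(c)) / (len(q) or 1), reverse=True)


def compress(query: str, chunk: str) -> str:
    """Keep only the sentences that share at least one word with the query."""
    q = tokens(query)
    sentences = re.split(r"(?<=[.!?])\s+", chunk.strip())
    return " ".join(s for s in sentences if q & tokens(s))


def post_process(query: str, chunks: list, max_words: int = 60) -> list:
    """Rerank, compress, and stay within a word budget for the prompt."""
    context, used = [], 0
    for chunk in rerank(query, chunks):
        short = compress(query, chunk)
        size = len(short.split())
        if not short or used + size > max_words:
            continue  # nothing relevant left, or the budget would be exceeded
        context.append(short)
        used += size
    return context


retrieved = [
    "Cats sleep a lot. They like boxes.",
    "A vector database stores embeddings. It was a sunny day.",
    "The database was slow.",
]
context = post_process("vector database", retrieved)
```

The word budget is a crude proxy for the model's context limit; a token count from the model's own tokenizer would be the more faithful measure.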

Modular RAG

As data types diversify to include audio, video, HTML, and HTTP connections, different tasks require dedicated modules rather than simply adding more steps to a single pipeline. Researchers have proposed modularizing these functions and performing iterative retrieval until a relatively stable prompt is achieved (e.g., when recall changes are minimal). This refined prompt allows LLMs to capture information more accurately than before.
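The iterative retrieval loop can be sketched with pluggable modules. This is an assumption-heavy illustration: the stopping rule (Jaccard overlap between consecutive result sets) is one simple reading of "recall changes are minimal", and `iterative_retrieve`, `keyword_retrieve`, and `append_rewrite` are hypothetical names with toy behaviour.

```python
from typing import Callable, List, Tuple


def iterative_retrieve(
    query: str,
    retrieve: Callable[[str], List[str]],
    rewrite: Callable[[str, List[str]], str],
    max_rounds: int = 5,
    stable_at: float = 0.9,
) -> Tuple[List[str], int]:
    """Retrieve, rewrite the query from the results, and repeat until results stabilise."""
    previous: set = set()
    current_query = query
    chunks: List[str] = []
    for round_no in range(1, max_rounds + 1):
        chunks = retrieve(current_query)
        current = set(chunks)
        union = previous | current
        overlap = len(previous & current) / len(union) if union else 1.0
        if overlap >= stable_at:
            return chunks, round_no  # result set barely changed: stop
        previous = current
        current_query = rewrite(query, chunks)
    return chunks, max_rounds


# Toy modules; any retriever or rewriter with the same signature can be swapped in.
corpus = [
    "rag uses retrieval",
    "retrieval needs an index",
    "an index stores vectors",
    "bananas are yellow",
]


def keyword_retrieve(query: str) -> List[str]:
    words = set(query.split())
    return [chunk for chunk in corpus if words & set(chunk.split())]


def append_rewrite(query: str, chunks: List[str]) -> str:
    return query + " " + " ".join(chunks)


final_chunks, rounds = iterative_retrieve("rag", keyword_retrieve, append_rewrite)
```

Because the retriever and the rewriter are passed in as plain callables, a different module (for example an audio or HTML retriever) can replace the toy one without touching the loop, which is the spirit of the modular design described above.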

For more details, please refer to the cited paper.